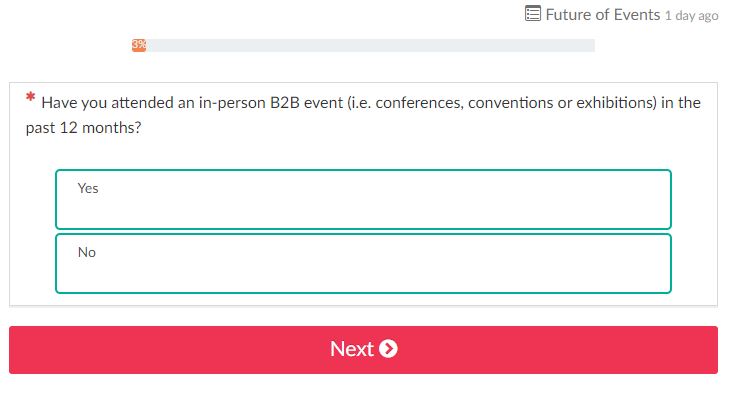

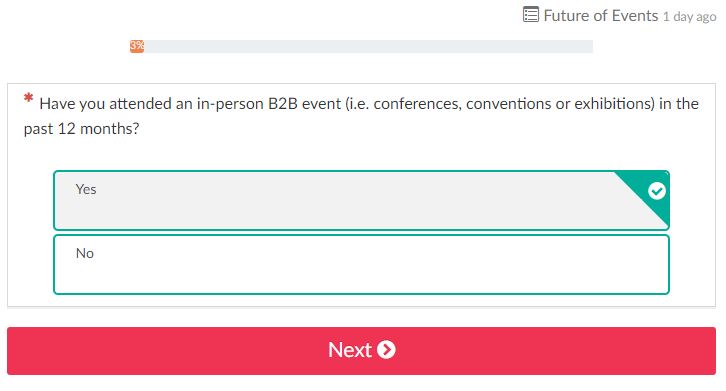

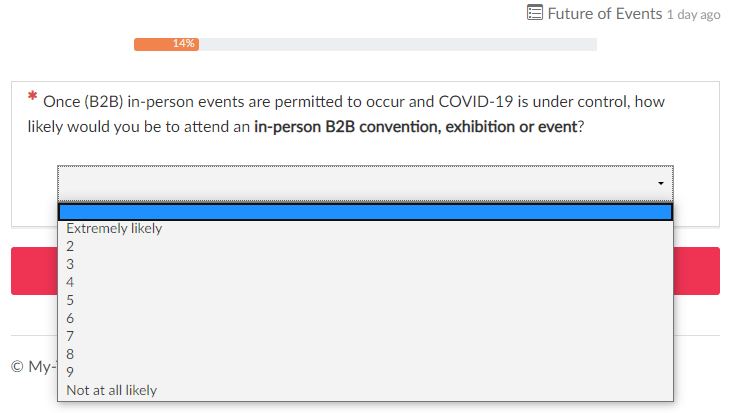

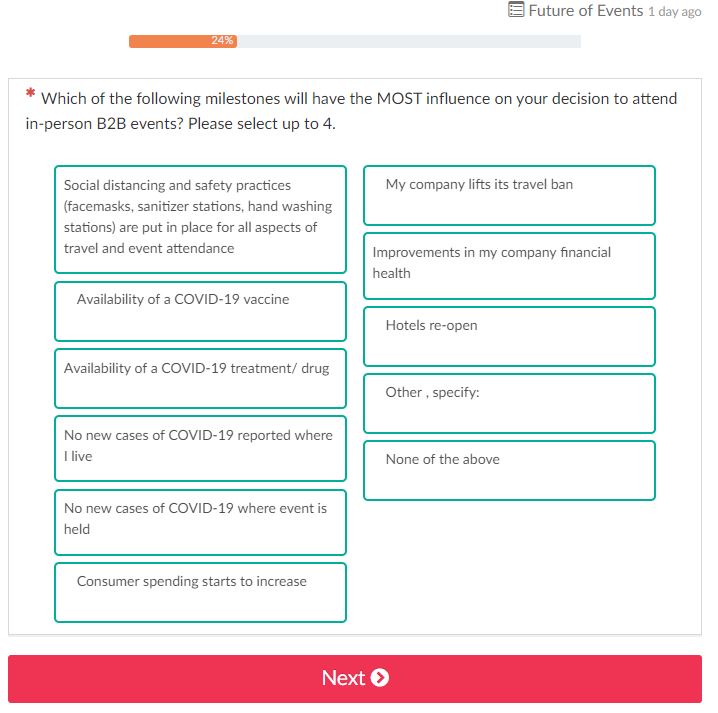

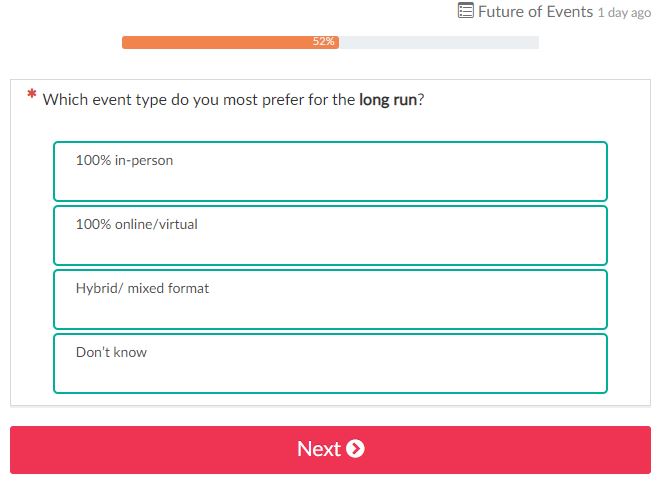

Julian Abich, Ph.D., Senior Human Factors Engineer

I'll start this out like every blog post in 2020… COVID-19 sucks and has thrown a wrench into everything! From a professional perspective, it has affected us all in different ways, but overall it has forced us to rethink the way we conduct our work. For human factors and UX/UI researchers, it has greatly limited our ability to conduct live field research with human participants. Because of this, data collection methods right now focus heavily on online or remote approaches. Specifically, web-based surveys may be flooding your inbox.

Surveys have been in general use for quite some time, for over a century in the U.S. (Converse, 1987). Web-based methods have been in use since the early days of the internet. Some consider them one of the most significant advances in survey technology of the 20th century (Dillman, 2000).

"With great power comes great responsibility" (I'll attribute this quote to the late and great Stan Lee). Okay, so survey writing may not be a superpower, but it is a powerful tool for collecting quantitative and qualitative data when implemented correctly. Actually, if I can understand a person's behavioral patterns based on collected data, then that kind of gives me the power to predict the future. I guess I do have superpowers!

Despite the longevity and broad applications of surveys, I constantly come across poorly designed online surveys, and it hurts my human factors brain. This is likely because anyone can create a survey, especially a web-based one, but being able to doesn't mean you should. So what should you do? Work with professionals who are trained to extract valuable information from participants or respondents. It may seem like an easy task to do on your own, but generating a valid and effective survey is as much an art as it is a science.
You don't become a great scientist or artist overnight; it takes time and experience to hone those skills.

Think about it this way: if you're planning to use the data collected from a survey to drive decisions about a product design, an event, or whatever, don't you think it's important to gather the most informative data possible? Especially if these products or events cost hundreds of thousands to millions of dollars to develop. I'll answer for you… YES!

So why are there still so many issues with online surveys? I say it's probably because people don't know what they don't know. They haven't been trained to write appropriately worded questions, organize the flow, or understand the factors that might influence a person's response to a question. As a result, they end up drawing conclusions that don't accurately reflect respondents' views, behaviors, or beliefs. The end result may be a poorly designed product that no one wants to use.

As I was writing this, I received another request to fill out a survey. This one seeks feedback on audiovisual events and insight into future needs to help produce in-person and virtual events. Let's see how well this survey is designed and whether there are ways it can be improved.

Target the right audience.

The email was sent to me because I attended a related conference last year. So far so good. Randomly sampling the population is another way to collect survey data and is part of what makes web-based surveys so attractive, but the sampling technique should depend on the context of the survey.

Always spell out acronyms.

Not all respondents are going to know what an acronym stands for, so it's best to spell each one out, at least the first time it is used. Below is an example of the first question that is asked. Besides the question, do you notice any other potential issues with the format or design of the form?
(Don't ask me what "Future of Events 1 day ago" means because I have no idea.) This is what the form looks like when you choose an answer. See any other issues with how it's designed? Maybe a color selection issue?

Rating scales should align with the questions and be consistent.

First, the scale should make sense when associated with a question. When Likert-type scales are used to gather responses, the lower end of the scale usually refers to less agreement, frequency, importance, likelihood, or quality. Do you see an issue with the scale below? Second, anchor labels can be used to mark the extreme ends of the scale, but each should still have an associated value (although it's best to have labels and values for every level of the scale to avoid confusion). Third, a label for the center of the scale should be provided, because otherwise the middle may simply be assumed to be a neutral response. Fourth, the scales should be as consistent as possible throughout the survey. Meaning, if you're using a 10-point scale, then don't make the next question a five- or seven-point scale as seen in the images below. This just makes answering questions more challenging for the respondents.

Avoid double-barreled questions.

The questions above are also poorly designed because they bundle multiple items that respondents might not feel the same way about. Asking respondents if they prefer "workshops, panels, and training" to be online takes away their ability to agree with only one of those. It may seem tedious, but these types of questions need to be reworded or broken out into separate questions.

Avoid leading and loaded questions.

Do not force respondents to provide answers that don't truly reflect their sentiment. Below it asks respondents to select up to four, but what if respondents only agreed with one or two items? Now you're forcing respondents to provide biased answers that don't truly reflect how they feel or would behave. See a problem with this?
Rephrasing the question to say they could choose up to four is different from saying they have to choose four.

Make sure the question wording is clear and well defined.

Don't leave the wording of questions open to interpretation (unless that is intentional). Every included word should be chosen with a clear purpose. If you asked different respondents what "long run" meant, you would probably receive many different answers.

These are just some examples to highlight the challenges of creating a valid survey; many other issues can arise. It may not be obvious until you sit down to analyze the data that you have a complex data set from which it's difficult (or impossible) to draw appropriate conclusions for decision-making. Don't waste your time and resources collecting worthless data when you can do it correctly the first time. You'll be happy, your boss will be happy, your customers will be happy, and the respondents will be happy to know their opinions matter.

Contact us if you have any questions (no pun intended) or need support with your data collection efforts.

References:

Converse, J. M. (1987). Survey research in the United States: Roots and emergence, 1890–1960. Berkeley, CA: University of California Press.

Dillman, D. A. (2000). Mail and Internet surveys: The tailored design method. New York: John Wiley & Sons, Inc.


Authors: These posts are written or shared by QIC team members. We find this stuff interesting, exciting, and totally awesome! We hope you do too!
