
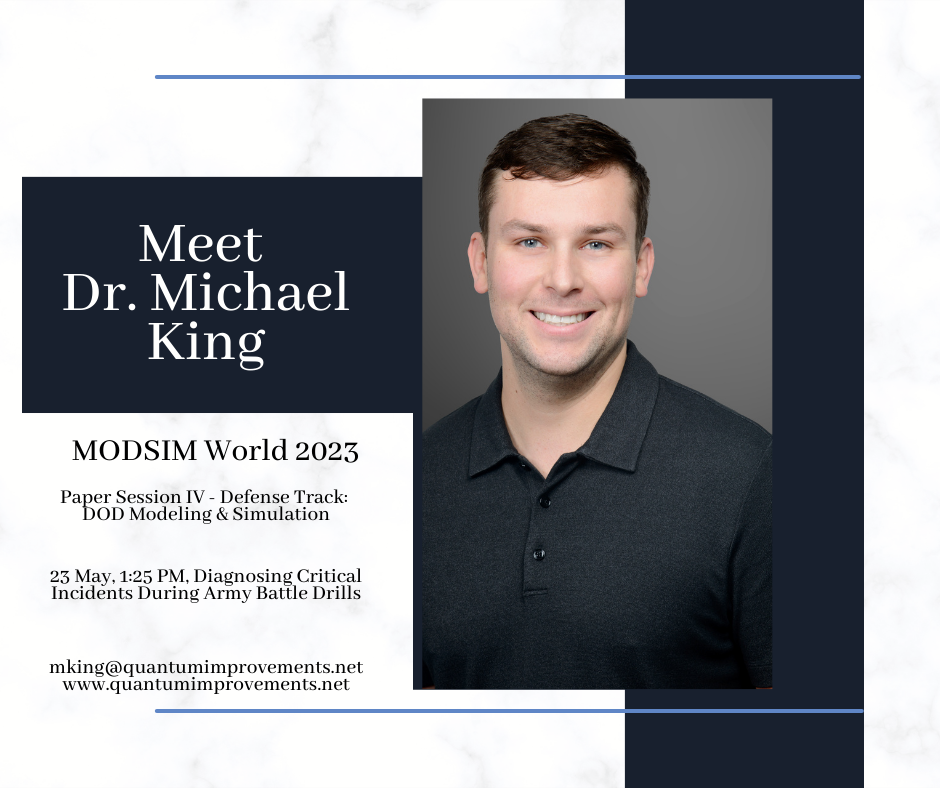

In military training environments, critical incidents such as injury, friendly fire, and non-lethal fratricide may occur. Quantitative performance data from training and exercises is often limited, so more in-depth case studies are needed to identify and correct the underlying causes of critical incidents. The present study collected Army squad performance, firing, and communication data during a dry-fire battle drill as part of a larger research effort to measure, predict, and enhance Soldier and squad close combat performance. Soldier-worn sensors revealed that some squads rated as top performers on quantitative measures also committed friendly fire and, in one case, fratricide. Case studies were therefore conducted to determine what contributed to these incidents. This presentation aims to provide insight into squad performance beyond quantitative ratings and to underscore the benefits of in-depth analysis when critical incidents occur during training. Squad communication data was particularly valuable in diagnosing the root causes of these incidents. For the fratricide incident specifically, the qualitative data revealed a communication breakdown between individual squad members stemming from a non-functioning radio. We will discuss the specific events leading up to the fratricide incident and the squad's response, along with communication patterns among high- and low-performing squads in the context of various critical incidents. We will also examine how the conditions surrounding critical incidents, and their underlying causes, can be recreated and manipulated in a simulated training environment, allowing instructors to control incident onset and provide timely feedback and instruction.


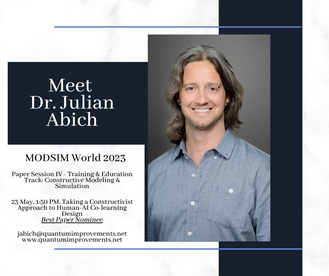

Artificial intelligence (AI) can facilitate personalized experiences that have been shown to affect training outcomes. Trainees and instructors can benefit from AI-enabled adaptive learning, task support, assessment, and learning analytics. The instructional strategies implemented, in turn, shape the learning and training benefits of AI. The co-learning strategy is the process of learning how to learn with another entity. AI co-learning techniques can encourage social, active, and engaging learning behaviors consistent with constructivist learning theory. While research on co-learning among humans is extensive, human-AI co-learning is less well understood. In a team context, co-learning is intended to support team members by facilitating knowledge sharing and awareness in accomplishing a shared goal. Co-learning can also apply when humans and AI partner on related tasks with different end goals. This paper will discuss the design of a human-agent co-learning tool for the United States Air Force (USAF) through the lens of constructivism. It will delineate the factors that contribute to effective human-AI co-learning interaction design. A USAF maintenance training use case provides a context for applying these factors, highlighting the initiative to leverage AI to help close an experience gap among maintenance personnel through more efficient, personalized, and engaging support.

Extended reality (XR) technologies have been used as effective training tools across many contexts, including military aviation, although commercial aviation has been slower to adopt them. While there is hype behind every new technology, XR technologies have matured beyond the emerging stage, and their impact on training is now supported by empirical evidence. Diffusion of innovation theory (Rogers, 1962) presents key factors that, when met, increase the likelihood of adoption. These factors are the relative advantage, trialability, observability, compatibility, and complexity of the XR technology, and each can be addressed through specific strategic approaches. This presentation will discuss each diffusion factor, provide exemplar use cases, and outline evidence-backed considerations to improve the probability of XR adoption for training. These considerations include effects that may accompany the introduction of XR technology, such as the novelty effect, in which performance initially improves because the technology is new rather than because learning has occurred. For each diffusion factor, key questions will be presented to help identify the information needed to support the case for XR adoption. Importantly, high-quality research evidence supporting XR implementation and adoption is critical to reducing the risk of ineffective training. Therefore, research-related considerations will also be discussed to ensure appropriate interpretation of the existing XR research literature. The goal is to give the audience an objective lens for determining whether XR technologies should be adopted for their training needs.

World Aviation Training Summit, April 18-20, 2023, Orlando, FL. April 20, 11:15 AM: Improving the Probability of XR Adoption for Training

United States Air Force (USAF) Tactical Aircraft Maintainers are responsible for ensuring that aircraft meet airworthiness standards and are operationally fit for missions. Aircraft maintenance is the USAF's largest enlisted career field, accounting for approximately 25% of its active duty enlisted personnel (US GAO, 2019). The USAF has successfully addressed maintenance staffing shortages in recent years, but the challenge has shifted to a lack of qualified and experienced maintainers (GAO, 2022). To address this issue, the USAF is seeking innovative ways to increase maintenance training efficiency, such as using artificial intelligence (AI) in the training environment (Thurber, 2021). Specifically, the USAF has funded the development of an AI-driven co-learning partner that monitors student learning, predicts learning outcomes, and provides appropriate support, recommendations, and feedback (Van Zoelen, Van Den Bosch, & Neerincx, 2021). The AI co-learning agent goes beyond a personalized learning assistant: it predicts learning outcomes from student data, anticipates learner needs, and adapts continuously to meet those needs over time while maintaining trust and common ground.
As a trusted partner, AI co-learning agents have the potential to enhance learning by dynamically adapting to student needs and providing unique analytical insights. The challenge is developing an evolving agent that continually meets the learner's needs as the learner progresses toward proficiency. Our literature review found that designing an effective AI co-learning tool for initial adoption and sustained use requires observability, predictability, directability, and explainability (Bosch et al., 2019). Establishing trust and common ground is also important, as both can affect the learner's confidence in the agent and influence tool usage. Taking a user-centered design approach, we derived a series of heuristics to guide the development of an AI co-learning tool based on the identified factors. We then mapped technical feature recommendations onto each heuristic, resulting in 10 unique and modified heuristics with associated exemplar feature recommendations. These technical features formed part of the design documentation for an AI co-learning tool prototype. The research-driven design heuristics will be presented along with the technical feature recommendations for the AI co-learning tool. The audience will gain practical insight into designing an effective human-AI co-learning tool to address training needs. The approach also applies beyond the immediate USAF need to other services, such as the U.S. Navy, where maintainer staffing has gradually declined (GAO, 2022).


Authors: These posts are written or shared by QIC team members. We find this stuff interesting, exciting, and totally awesome! We hope you do too!
