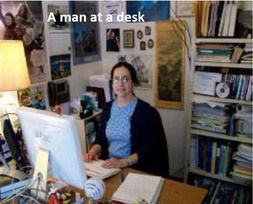

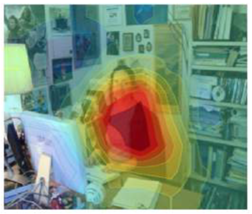

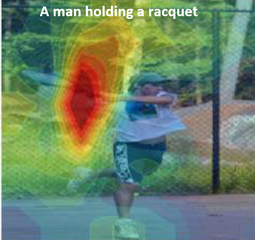

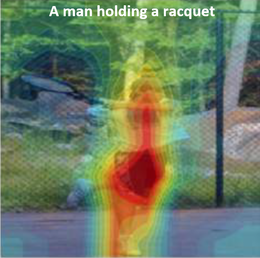

Grace Teo, Ph.D., Sr. Research Psychologist

The major challenge with the use of artificial intelligence (AI) is that it is often difficult to explain how AI or machine learning (ML) solutions and recommendations come to be. Previously, this may not have mattered as much because AI’s use was limited and its recommendations were confined to relatively trivial decisions. In the past few decades, however, AI use has become more pervasive, and some AI solutions now affect high-stakes decisions, so the problem has become increasingly important. Fueling the urgency are findings that AI solutions can be unintentionally biased, depending on the data used to train the algorithms. For instance, the algorithm used by Amazon to hire staff was found to be biased against women because it was trained on previous data that largely comprised resumes from male applicants (Shin, 2020). Algorithms used by COMPAS (i.e., Correctional Offender Management Profiling for Alternative Sanctions) to predict the likelihood of recidivism were also found to predict that black offenders were twice as likely to reoffend as white offenders (Shin, 2020). These are only two of many instances of algorithm bias.

These algorithms tend to come from “black box” models that are developed from powerful neural networks performing deep learning. Such neural networks are usually what researchers have to use for computer vision and image processing, which are less amenable to other AI/ML techniques. Researchers in the field of explainable AI (or XAI) have tried to shine a light into these “black boxes” in different ways. For instance, one popular way to help explain deep learning AI that processes images is to use heat or saliency maps, which show the regions or pixels of a picture that appear to strongly influence the algorithm’s prediction (i.e., what the network is “paying attention to”).
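To make the idea concrete, here is a minimal sketch of one simple way to build such a map, often called occlusion sensitivity: cover one patch of the image at a time and record how much the model's score drops. This is not necessarily the method behind the examples cited in this post. The names `toy_model` and `occlusion_saliency`, the 4x4 "image", and the patch size are all hypothetical stand-ins chosen for illustration; a real network would take the place of `toy_model`.

```python
def toy_model(image):
    """Hypothetical stand-in for a black box classifier.

    Returns a score that, unknown to the caller, depends only on the
    top-left 2x2 corner of the image.
    """
    return sum(image[r][c] for r in range(2) for c in range(2)) / 4.0


def occlusion_saliency(model, image, patch=2, fill=0.0):
    """Return a map of how much the score drops when each patch is hidden."""
    rows, cols = len(image), len(image[0])
    baseline = model(image)
    saliency = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            # Copy the image and blank out one patch.
            occluded = [row[:] for row in image]
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    occluded[r][c] = fill
            # A large drop means the model relied on this patch.
            drop = baseline - model(occluded)
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    saliency[r][c] = drop
    return saliency


image = [[1.0] * 4 for _ in range(4)]  # toy 4x4 grayscale "image"
saliency = occlusion_saliency(toy_model, image)
for row in saliency:
    print(row)
```

In this toy case only the top-left block receives a nonzero value, which is the map's way of saying that this is where the model is "looking".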
If the highlighted regions aren’t relevant to the algorithm’s task at hand, then the researcher may be looking at a biased algorithm. In her recent talk at a Deep Learning Summit, Rohrbach (2021) showed an example of wrong captioning by a black box model, which mislabeled a woman sitting at a desk in front of a computer monitor as “a man” (Figure 1a). The saliency map showed that the network was attending more to the computer monitor than to the person in the picture (Figure 1b), when it should have been focusing more on the person (Figure 1c). Presumably the bias arose because the training data contained more images of men sitting in front of computers than of women doing the same. Saliency maps also provide a way to evaluate the “logic” of the algorithm even when the captioning seems appropriate (see Figures 2a & 2b).

A major criticism of such saliency maps is that they show only which inputs were important to the algorithm’s prediction. They do not reveal how these inputs are used. For instance, if two models produced different captions or predictions but had very similar saliency maps, the maps would not explain how the models reached their different predictions (Wan, 2020).

Due to such limitations, other researchers, such as Dr. Cynthia Rudin, propose that interpretable models should be developed instead of trying to make AI explainable. The difference is that interpretable models are inherently understandable: the researcher can see how the model derives its solutions, instead of merely trying to coax explanations from a model by reviewing which inputs were more influential than others after the algorithm has been developed (Rudin, 2021). Dr. Rudin’s work to make neural networks interpretable has received wide acclaim, and she is a strong proponent of using interpretable models, especially for high-stakes decisions (Rudin, 2019). However, at the recent Deep Learning Summit, Dr.
Rudin also admitted that developing such interpretable models takes more time and effort. This is because, unlike black box models, where researchers would not know whether the model is working properly, interpretable models force researchers to troubleshoot the data when they see that the model is not working as it should, even if the solutions look fine (Rudin, 2021).

Nevertheless, some still argue for the use of black box models that are low on explainability. Black box models are harder to copy and can give the company that developed them a competitive advantage. They are also easier to develop. Proponents of this view believe that the goal is not to explain every black box model but to identify when to use black box models (Harris, 2019). If a black box algorithm is able to perform its task to a high degree of accuracy, we may not need to know exactly how it did so. Besides, what is a valid explanation to one person may not be a valid explanation to another. These researchers argue that even physicians routinely use things they do not fully understand, including common drugs that have been shown to be consistently effective even though no one totally comprehends how they work in every patient. What is important is that enough testing is done to ensure that the algorithm is dependable and suitable for its intended use (Harris, 2019).

When I first learned and read about AI and the problem of inexplicability some years back, I remember taking the position that the ends justified the means. That is, so long as the algorithm was accurate, I’d be willing to sacrifice explainability. However, as I learned more about how people are using AI solutions for all sorts of important medical, hiring, and criminal justice decisions, I started to reconsider my position. After attending the Deep Learning Summit on Explainable AI (XAI) earlier this year, I am more inclined to think that it really depends on the application and type of decision involved.
Just because a black box model has a sterling track record of highly accurate predictions does not mean that its next prediction cannot be poor. The higher the stakes of the decision, the better our understanding of the workings of the AI should be.

What do you think? For what applications or decisions would you accept a model that is unexplainable but has been consistently accurate?

References

Harris, R. (2019). How can doctors be sure a self-taught computer is making the right diagnosis? Retrieved from https://www.npr.org/sections/health-shots/2019/04/01/708085617/how-can-doctors-be-sure-a-self-taught-computer-is-making-the-right-diagnosis

Rohrbach, A. (2021). Explainable AI for addressing bias and improving user trust. Presented at the Deep Learning Summit 2021.

Rudin, C. (2019). Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature Machine Intelligence, 1(5), 206-215.

Rudin, C. (2021). Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Presented at the Deep Learning Summit 2021.

Shin, T. (2020). Real-life examples of discriminating artificial intelligence: Real-life examples of AI algorithms demonstrating bias and prejudice. Retrieved from https://towardsdatascience.com/real-life-examples-of-discriminating-artificial-intelligence-cae395a90070

Wan, A. (2020). What explainable AI fails to explain (and how we fix that). Retrieved from https://towardsdatascience.com/what-explainable-ai-fails-to-explain-and-how-we-fix-that-1e35e37bee07
